Precision is the ratio of true positives to the total of the true positives and false positives: TP / (TP + FP). Precision looks to see how many junk positives got thrown into the mix. If there are no bad positives (those FPs), then the model has 100% precision. The more FPs that get into the mix, the uglier that precision is going to look. To calculate a model’s precision, we need the positive and negative numbers from the confusion matrix.

Instead of looking at the number of false positives the model predicted, recall looks at the number of false negatives that were thrown into the prediction mix. Recall is the ratio of true positives to the total of the true positives and false negatives: TP / (TP + FN). The recall rate is penalized whenever a false negative is predicted. Because the penalties in precision and recall are opposites, so too are the equations themselves. Precision and recall are the yin and yang of assessing the confusion matrix.
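The two ratios can be sketched in a few lines of Python. This is only an illustration: the function names and the counts (8 true positives, 2 false positives, 3 false negatives) are hypothetical values chosen for the example, not numbers taken from a real model.

```python
def precision(tp: int, fp: int) -> float:
    """TP / (TP + FP); returns 0.0 if the model predicted no positives."""
    predicted_positive = tp + fp
    return tp / predicted_positive if predicted_positive else 0.0


def recall(tp: int, fn: int) -> float:
    """TP / (TP + FN); returns 0.0 if there were no actual positives."""
    actual_positive = tp + fn
    return tp / actual_positive if actual_positive else 0.0


# Hypothetical counts, for illustration only.
tp, fp, fn = 8, 2, 3

print(f"precision = {precision(tp, fp):.3f}")  # 0.800
print(f"recall    = {recall(tp, fn):.3f}")     # 0.727
```

Note how the false positives only appear in the precision denominator and the false negatives only in the recall denominator, which is why each metric is penalized by a different kind of mistake.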

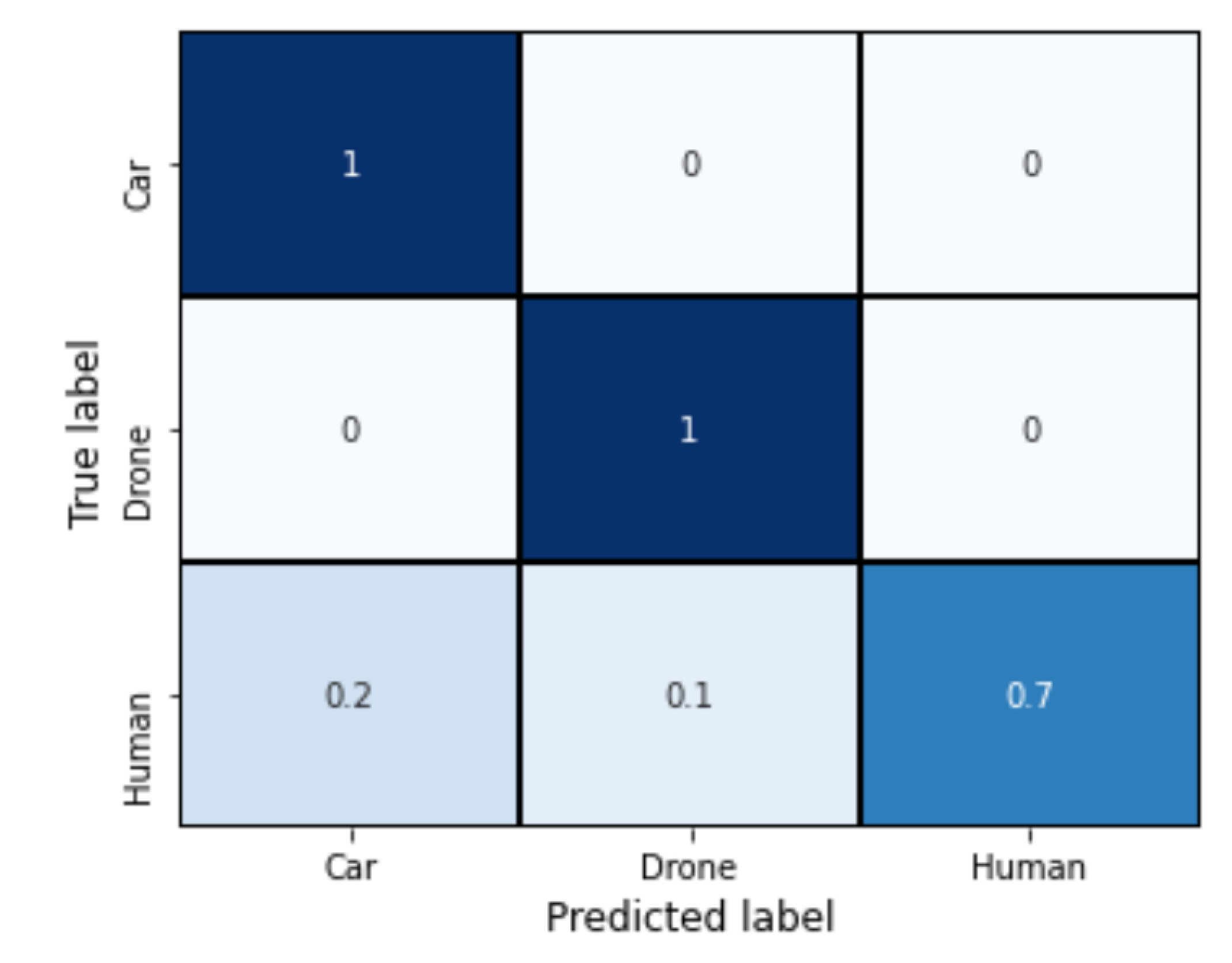

A false negative works the opposite way from a false positive: the model predicts right, but the actual result is left. Using a confusion matrix, these numbers can be shown on a chart.

In this confusion matrix, there are 19 total predictions made. The False Positive cell, number 2, means that the model predicted a positive, but the actual was a negative. The False Negative cell, number 3, means that the model predicted a negative, and the actual was a positive.

The false positive means little to the direction a person chooses at this point. But if you add some stakes to the choice, say choosing right leads to a huge reward and falsely choosing it means certain death, then a false negative could be very costly. We would only want the model to make the decision if it were 100% certain that was the choice to make.

Weighing the costs and benefits of choices gives meaning to the confusion matrix. The Instagram algorithm needs to put a nudity filter on all the pictures people post, so a nude photo classifier is created to detect any nudity. If a nude picture gets posted and makes it past the filter, that could be very costly to Instagram. So, they are going to flag more photos than strictly necessary, accepting extra false positives, because the cost of letting one through is so high.

Non-binary classification

Finally, confusion matrices do not apply only to a binary classifier. They can be used on any number of categories a model needs, and the same rules of analysis apply. For instance, a matrix can be made to classify people’s assessments of the Democratic National Debate, with all the predictions the model makes placed in a confusion matrix.
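A confusion matrix with more than two categories can be sketched in plain Python as follows. The three labels (`good`, `neutral`, `bad`) and the sample data are hypothetical stand-ins, since the article does not list the actual categories. Each class is scored one-vs-rest: predictions of that class are its positives, everything else its negatives.

```python
from collections import defaultdict


def confusion_matrix(actual, predicted):
    """Nested counts: matrix[actual_label][predicted_label]."""
    matrix = defaultdict(lambda: defaultdict(int))
    for a, p in zip(actual, predicted):
        matrix[a][p] += 1
    return matrix


def per_class_scores(matrix, labels):
    """Return {label: (precision, recall)}, treating each label as 'positive'."""
    scores = {}
    for label in labels:
        tp = matrix[label][label]
        # Other classes that were wrongly predicted as this label.
        fp = sum(matrix[other][label] for other in labels if other != label)
        # This label wrongly predicted as some other class.
        fn = sum(matrix[label][other] for other in labels if other != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        scores[label] = (precision, recall)
    return scores


# Hypothetical labels and data, for illustration only.
labels = ["good", "neutral", "bad"]
actual = ["good", "good", "neutral", "bad", "bad", "neutral", "good"]
predicted = ["good", "neutral", "neutral", "bad", "good", "neutral", "good"]

matrix = confusion_matrix(actual, predicted)
scores = per_class_scores(matrix, labels)
for label in labels:
    p, r = scores[label]
    print(f"{label:8s} precision={p:.2f} recall={r:.2f}")
```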

The confusion matrix can work on any prediction task that makes a yes or no, or true or false, distinction. The purpose of the confusion matrix is to show how…well, how confused the model is. To do so, we introduce two concepts: false positives and false negatives. If the model is to predict the positive (left) and the negative (right), then a false positive is predicting left when the actual direction is right.
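As a minimal sketch of how the four cells get filled in, the snippet below tallies true and false positives and negatives for the left/right example, with left treated as the positive class. The function name and the six sample turns are hypothetical.

```python
from collections import Counter


def confusion_counts(actual, predicted, positive="left"):
    """Tally TP, FP, FN and TN for a binary task."""
    counts = Counter()
    for a, p in zip(actual, predicted):
        if p == positive:
            # Model said "positive": correct if the actual was positive too.
            counts["TP" if a == positive else "FP"] += 1
        else:
            # Model said "negative": a miss if the actual was positive.
            counts["FN" if a == positive else "TN"] += 1
    return counts


# Hypothetical turns, for illustration only.
actual = ["left", "left", "right", "right", "left", "right"]
predicted = ["left", "right", "right", "left", "left", "right"]

counts = confusion_counts(actual, predicted)
print(dict(counts))
```

Here the fourth driver is a false positive (predicted left, went right) and the second is a false negative (predicted right, went left).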

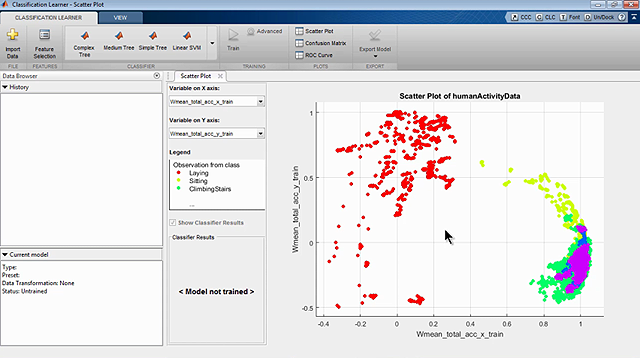


So, you’ve built a machine learning model. You give it your inputs and it gives you an output. Sometimes the output is right and sometimes it is wrong. You know the model is predicting at about 86% accuracy because the predictions on your training test said so.

But accuracy alone, even at 86%, is not a good enough metric. With it, you only uncover half the story. Sometimes, it may give you the wrong impression altogether. Precision, recall, and a confusion matrix…now that’s safer.

Confusion matrix

Both precision and recall can be interpreted from the confusion matrix, so we start there. The confusion matrix is used to display how well a model made its predictions. Let’s look at an example: A model is used to predict whether a driver will turn left or right at a light.
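To make the point about accuracy concrete, here is a small sketch using the left/right driver setup. The data is entirely hypothetical: 100 drivers, 86 of whom turn right, and a lazy model that always predicts right. It scores 86% accuracy while never catching a single left turn.

```python
# Hypothetical data: 86 right turns, 14 left turns.
actual = ["right"] * 86 + ["left"] * 14
# A model that ignores its input and always predicts "right".
predicted = ["right"] * 100

correct = sum(a == p for a, p in zip(actual, predicted))
accuracy = correct / len(actual)

# Treat "left" as the positive class.
tp = sum(a == "left" and p == "left" for a, p in zip(actual, predicted))
fn = sum(a == "left" and p == "right" for a, p in zip(actual, predicted))
recall_left = tp / (tp + fn) if tp + fn else 0.0

print(f"accuracy    = {accuracy:.2f}")     # 0.86
print(f"recall_left = {recall_left:.2f}")  # 0.00
```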
